As my nephews are coming of age, I'm considering taking an apprentice. This has resulted in me thinking more of how I might explain programming best practices to the layman. Today I'd like to focus on performance.

Suppose you had to till, plant and water an arbitrary number of acres. Would you propose ploughing a foot, planting a seed and watering ad nauseam? I suspect not. This is because context switching costs a great deal. Indeed, the context switches between ploughing, seeding and watering will end up being the costliest part of the work when scaling this (highly inefficient) process to many acres.

This is why batching of work is the solution everyone reaches for instinctively. It is from this fact that economic specialization developed. I can only hold so much in my own two hands and can't be in two places at once. It follows that I can wash far more dishes or cook far more orders by being a cook or dish-washer all day than I can by switching between the tasks repeatedly.
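To put rough numbers on the farming analogy, here is a minimal Python sketch. It assumes a toy cost model in which the only thing counted is how often the worker changes tools; the names `TASKS`, `interleaved`, `batched` and `count_switches` are made up for illustration.

```python
TASKS = ("plough", "seed", "water")

def interleaved(plots):
    """Plough, seed and water one plot before moving on to the next."""
    return [(task, plot) for plot in range(plots) for task in TASKS]

def batched(plots):
    """Plough every plot, then seed every plot, then water every plot."""
    return [(task, plot) for task in TASKS for plot in range(plots)]

def count_switches(schedule):
    """Count how many times the worker has to pick up a different tool."""
    switches = 0
    current = None
    for task, _plot in schedule:
        if task != current:
            switches += 1
            current = task
    return switches
```

Under this model the interleaved schedule switches tools on every single step, while the batched schedule switches once per task no matter how many plots there are.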

That said, doing so only makes sense at a particular scale of activity. If your operational scale can't afford specialized people or equipment you will be forced to "wear all the hats" yourself. Naturally this means that operating at a larger scale will be more efficient, as it can avoid those context switching costs.

Unfortunately, the practices adopted at small scale prove difficult to overcome. When these are embodied in programs, they are like concreting in a plumbing mistake (and thus quite costly to remedy). I have found this to be incredibly common in the systems I have worked with. The only way to avoid such problems is to insist your developers test against worst-case data sets rather than trivial ones.

When ploughing you can choose a pattern of furrowing that ends up right where you started to minimize the cost of the eventual context switch to seeding or watering. Almost every young man has mowed a lawn and has come to this understanding naturally. Why is it then that I repeatedly see simple performance mistakes which a manual laborer would consider obvious?

For example, consider a file you are parsing to be a field, and lines to be the furrows. If we need to make multiple passes, it will behoove us to avoid a seek to the beginning, much like we try to arrive close to the point of origin in real life. We would instead iterate in reverse over the lines. Many performance issues are essentially a failure to understand this problem. Which is to say, a cache miss. Where we need to be is not within immediate sequential reach of our working set. Now a costly context switch must be made.
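A minimal Python sketch of that access pattern follows. It assumes the file fits in memory and holds one integer per line; `two_pass` is a hypothetical helper, and the point is the shape of the traversal rather than a benchmark.

```python
import io

def two_pass(handle):
    """Make two passes over a file without seeking back to the start."""
    # One sequential read; the lines stay in the working set afterwards.
    lines = handle.read().splitlines()

    # Pass 1: front to back, like ploughing down the field.
    total = 0
    for line in lines:
        total += int(line)

    # Pass 2: back to front, starting from where pass 1 finished.
    shares = []
    for line in reversed(lines):
        shares.append(int(line) / total)
    shares.reverse()  # restore file order for the caller
    return total, shares
```

Calling `two_pass(io.StringIO("1\n1\n2\n"))` walks the three lines forward once and backward once, and never calls `seek(0)` on the handle.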

All important software currently in use is in use precisely because it understood this, and its competitors did not. The reason preforking webservers and then PSGI/WSGI + reverse proxies took over the world is because of this -- program startup is an important context switch. Indeed, the rise of Event-Driven programming is entirely due to this reality. It encourages the programmer to keep as much as possible in the working set, where we can get acceptable performance. Unfortunately, this is also behind the extreme bloat in working sets of programs, as proper cache loading and eviction is a hard problem.

If we wish to avoid bloat and context switches, both our data and the implements we wish to apply to it must be sequentially available to each other. Computers are in fact built to exploit this; "Deep pipelining" is essentially this concept. Unfortunately, a common abstraction which has made programming understandable to many hinders this.

Object-Orientation encourages programmers to hang a bag on the side of their data as a means of managing the complexity involved with "what should transform this" and "what state do we need to keep track of doing so". The trouble with this is that it encourages one-dimensional thinking. My plow object is calling the aerateSoil() method of the land object, which is instantiated per square foot, which calls back to the seedFurroughedSoil() method... You might laugh at this example (given the problem is so obvious with it), but nearly every "DataTable" component has this problem to some degree. Much of the slowness of the modern web is indeed tied up in this simple failure to realize they are context switching far too often.
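The contrast can be sketched in a few lines of Python. Everything here is hypothetical: `SquareFoot`, `till_per_object` and `till_in_batches` are illustrative stand-ins for the plow and land objects above, not code from any real DataTable component.

```python
class SquareFoot:
    """One object per square foot of land, each carrying its own state."""
    def __init__(self):
        self.aerated = False
        self.seeded = False

    def aerate(self):
        self.aerated = True

    def seed(self):
        self.seeded = True

def till_per_object(n):
    """Hop between methods for every single square foot."""
    land = [SquareFoot() for _ in range(n)]
    for plot in land:
        plot.aerate()
        plot.seed()
    return sum(plot.seeded for plot in land)

def till_in_batches(n):
    """Keep each attribute in one flat buffer and sweep it in a single pass."""
    aerated = bytearray(n)
    seeded = bytearray(n)
    aerated[:] = b"\x01" * n  # aerate the whole field
    seeded[:] = b"\x01" * n   # then seed the whole field
    return sum(seeded)
```

Both functions report the same number of seeded plots. The second simply never leaves the data it is working on to go call something else.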

This is not to say that object orientation is bad, but that one-dimensional thinking (as is common with those of lesser mental faculties) is bad for performance. Sometimes one-dimensional thinking is great -- every project is filled with one-dimensional problems which do not require creative thinkers to solve. We will need dishes washed until the end of time. That said, letting the dish washers design the business is probably not the smartest of moves. I wouldn't have trusted myself to design and run a restaurant back when I washed dishes for a living.

You have to consider multiple dimensions. In 2D, your data will need to be consumed in large batches. In practice, this means memoization and tight loops rather than function composition or method chaining. Problems scale beyond this -- into the third and fourth dimension, and the techniques used there are even more interesting. Almost every problem in 3 dimensions can be seen as a matrix translation, and in 4 dimensions as a series of relative shape rotations (rather than as quaternion matrix translation).
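As a small illustration of "memoization and tight loops", here is a Python sketch. The soil types, their factors and the function names are invented for the example; `functools.lru_cache` is the standard library's memoization decorator.

```python
from functools import lru_cache

@lru_cache(maxsize=None)
def soil_factor(soil_type):
    """Stand-in for an expensive lookup; computed once per distinct soil type."""
    return {"clay": 1.5, "loam": 1.0, "sand": 0.5}[soil_type]

def water_needed(plots):
    """One tight loop over (soil_type, area) pairs instead of a chain of calls."""
    total = 0.0
    for soil_type, area in plots:
        total += soil_factor(soil_type) * area
    return total
```

After a run over many plots, `soil_factor.cache_info()` shows how few of the lookups actually had to be computed.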

Thankfully, this discussion of viewing things from multiple dimensions hits upon the practical approach to fixing performance problems. Running many iterations of a program with a large dataset under a profiling framework (hopefully producing flame-graphs) is the change of perspective most developers need. Considering the call stack forces you into the 2-dimensional mindset you need to be in (data over time).

This should make sense intuitively, as in the example of the ploughman. He calls furrough(), seed() and water() upon the dataset consisting of many hectares of soil. Which is taking the majority of time should be made immediately obvious simply by observing how long it takes per foot of soil acted upon per call, and context switch costs.
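Here is a hedged sketch of what that looks like with Python's built-in `cProfile` and `pstats` modules. The bodies of `furrough()`, `seed()` and `water()` are invented, with `water()` made deliberately wasteful so it stands out; `profile` and `work` are hypothetical helpers. The output is a text report rather than a flame-graph, but it carries the same information.

```python
import cProfile
import io
import pstats

def furrough(field):
    return [depth + 1 for depth in field]

def seed(field):
    return [depth * 2 for depth in field]

def water(field):
    # Deliberately wasteful: re-sorts the whole field once per plot.
    for _ in field:
        field = sorted(field)
    return field

def work(field):
    return water(seed(furrough(field)))

def profile(func, *args):
    """Run func under cProfile; return its result and a text report."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    out = io.StringIO()
    stats = pstats.Stats(profiler, stream=out)
    stats.sort_stats("cumulative").print_stats(10)
    return result, out.getvalue()
```

Running `profile(work, list(range(2000)))` and printing the report lists each call with its cumulative time, which is the per-call, per-foot-of-soil view described above.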

I read a Warren Buffett quote the other day that sort of underlines the philosophy I try to take with my programs given the option:

"We try to find businesses that an idiot can run, because eventually an idiot will run it."

This applies inevitably to your programs too. I'm not saying that you should treat your customers like idiots. Idiots don't have much money and treating customers like they are upsets the smart ones that actually do have money. You must understand that they can cost you a lot of money without much effort on their part. This is the thrust of a seminal article: The fundamental laws of human stupidity.

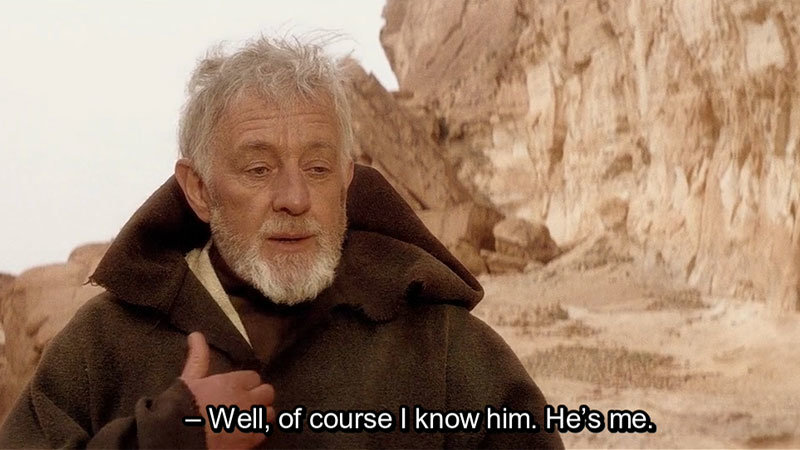

This is why many good programs focus on having sane defaults, because that catches 80% of the stupid mistakes people make. That said, the 20% of people who are part of the "I know just enough to be dangerous" cohort (see illustration) cause 80% of the damage. Aside from the discipline that comes with age (George, why do you charge so much?), there are a few things you can do to whittle down 80% of that dangerous 20%. This usually involves erecting a Chesterton's Fence of some kind, like a --force or --dryrun option. Beyond that lies the realm of disaster recovery, as some people will just drop the table because a query failed.
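A minimal sketch of such a fence, using Python's standard `argparse` module. The `drop-table` tool and its `run` function are hypothetical, and the destructive step is only a placeholder string.

```python
import argparse

def build_parser():
    parser = argparse.ArgumentParser(prog="drop-table")
    parser.add_argument("table", help="name of the table to drop")
    parser.add_argument("--dryrun", action="store_true",
                        help="report what would happen, change nothing")
    parser.add_argument("--force", action="store_true",
                        help="actually perform the destructive action")
    return parser

def run(argv):
    """Sane default: do nothing destructive unless explicitly told to."""
    args = build_parser().parse_args(argv)
    if args.dryrun:
        return f"would drop {args.table}"
    if not args.force:
        return f"refusing to drop {args.table} without --force"
    # Placeholder for the real destructive action.
    return f"dropped {args.table}"
```

With no flags, `run(["users"])` refuses to act. The user has to climb the fence on purpose before anything is dropped.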

This also applies to the architecture of software stacks and the business in general (as mentioned by Buffett). I see a lot of approaches advocated to the independent software vendor because "Google uses it" and similar nonsense. They've got a huge blind spot they admit freely as "I can't count that low". What has resulted from this desire to "ape our betters" is an epidemic of swatting flies with elephant guns, and vault doors on crack houses. This time could have been spent building win-wins with smart customers or limiting the attack surface exploited by the dumb or malicious.

So long as you take a fairly arms-length approach with regard to the components critical to your stack, swapping one out for another more capable one is the kind of problem you like to have. This means you are scaling to the point you can afford to solve it.
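One way to keep a component at arm's length is to have the rest of the program depend on a narrow interface rather than on the component itself. The Python sketch below assumes a cache as the component in question; `Cache`, `DictCache` and `greeting` are hypothetical names.

```python
from typing import Optional, Protocol

class Cache(Protocol):
    """The only surface the rest of the program is allowed to touch."""
    def get(self, key: str) -> Optional[str]: ...
    def set(self, key: str, value: str) -> None: ...

class DictCache:
    """Small-scale implementation: a plain in-process dictionary."""
    def __init__(self) -> None:
        self._data = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def set(self, key: str, value: str) -> None:
        self._data[key] = value

def greeting(cache: Cache, name: str) -> str:
    """Application code that only knows about the Cache interface."""
    cached = cache.get(name)
    if cached is None:
        cached = f"Hello, {name}"
        cache.set(name, cached)
    return cached
```

If the dictionary is ever outgrown, a more capable backend only has to provide the same two methods. `greeting` does not change.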

The scientific method is well understood by schoolchildren in theory, but thanks to the realities of schooling systems they are rarely if ever exposed to its actual practice. This is because the business of science can be quite expensive. Every experiment takes time and nontrivial amounts of capital, much of which may be irreversibly lost in each experiment. As such, academia is far behind modern development organizations. In most cases they are not even aware of the extent to which we have made great strides towards actually doing experimentation.

Some of this is due to everyone capable of making a difference toward that problem being able to achieve more gainful employment in the private sector. Most of it is due to the other hard sciences not catching up to our way of experimentation either. This is why SpaceX has been able to succeed where NASA has failed -- by applying our way to a hard science. There's also a lack of understanding at a policy level as to why the scientifically inclined overwhelmingly prefer computers to the concrete sciences. The Chinese government has made waves of late claiming they wish to address this, but I see no signs as of yet that they are aware how this trend occurred in the first place.

Even if it were not the case that programming is a far quicker path to life-changing income for most than the other sciences, I suspect most would still prefer it. Why this income potential exists in the first place is actually the reason for such preference. It is far, far quicker and cheaper to iterate (and thus learn from) your experiments. Our tools for peer review are also far superior to the legacy systems that still dominate in the other sciences.

Our process also systematically embraces the building of experiments (control-groups, etc) to the point we've got entire automated orchestration systems. The Dev, Staging/Testing and Production environments model works quite well when applied to the other sciences. Your development environment is little more than a crude simulator that allows you to do controlled, ceteris-paribus experiments quickly. As changes percolate upward and mix they hit the much more mutatis mutandis environment of staging/testing. When you get to production your likelihood of failure is much reduced versus the alternative. When failures do happen, we "eat the dog food" and do our best to fix the problems in our simulated environments.

Where applied in the other sciences, our approach has resurrected forward momentum. Firms which do not adopt it in the coming years will be outcompeted by those that do. Similarly, countries which do not re-orient their educational systems away from rote memorization and towards guided experimental rediscovery from first principles using tools very much like ours will also fall behind.

I've been writing a bunch of TypeScript lately, and figured out why most of the "Async" modules out there are actually fakin' the funk with coroutines.

Turns out even pedants like programmers aren't immune to meaning drift! I guess I'm an old man now lol.

Article mentioned: Troglodyne Q3 Open Source goals